I've started implementing occlusion mapping in PBRT. Adding a new shader to PBRT is quite easy: you only need to add another class that extends the SurfaceIntegrator class. Since my approach is similar to photon mapping, I could reuse a lot of code from the photon mapping class.

In my implementation, I programmed three rendering techniques and three techniques to distribute the occlusion photons through the scene. In the following sections, I'll discuss their implementation and their impact on the rendered images.

Terminology

I'd like to start by going through the terminology that I will use in this (and future) blog posts:

- Occlusion map: a kd-tree which stores light and occlusion photons for a specific light source.

- Light photon: a photon that indicates that a position in the scene is visible from a light source.

- Occlusion photon: a photon that indicates that a position is occluded. It also stores a list with all the occluders of that position.

- Occlusion ray: a ray which is traced through the scene and creates the light and occlusion photons. Depending on the technique, it creates a light photon at its first intersection. At each subsequent intersection, an occlusion photon is created.
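To make these terms concrete, here is a minimal sketch in Python (the actual implementation is C++ inside PBRT, so this is illustration only). The names `LightPhoton`, `OcclusionPhoton` and `deposit_photons` are hypothetical. The sketch also assumes that the occluders of a position are the primitives the occlusion ray hit before reaching it.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class LightPhoton:
    """Marks a position as visible from the light source."""
    position: Point


@dataclass
class OcclusionPhoton:
    """Marks a position as occluded and remembers what blocks it."""
    position: Point
    occluders: List[int] = field(default_factory=list)  # hypothetical primitive ids


def deposit_photons(hits, with_light_photons):
    """Turn the ordered hits of one occlusion ray into photons.

    hits: list of (position, primitive_id), ordered from the light outwards.
    Assumption: the occluders of a hit are the primitives hit earlier on the ray.
    """
    light, occlusion = [], []
    blockers = []
    for i, (position, primitive_id) in enumerate(hits):
        if i == 0:
            # The first intersection is directly visible from the light.
            if with_light_photons:
                light.append(LightPhoton(position))
        else:
            # Every later intersection is in shadow of the earlier primitives.
            occlusion.append(OcclusionPhoton(position, list(blockers)))
        blockers.append(primitive_id)
    return light, occlusion
```

In a real occlusion map the resulting photons would be stored in a kd-tree per light source, which is left out here.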

Implementation

In the previous blog post on Occlusion Mapping, I described two different approaches to occlusion mapping: one with light photons and one without light photons. I implemented both of them.

Furthermore, I added one more approach in the implementation which I got from the paper "Efficiently rendering shadows using the photon map" by Henrik Wann Jensen et al. In this paper they approximate the visibility by using the ratio of light photons versus light plus shadow photons.

visibility = #light photons / (#light photons + #shadow photons)
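As a small illustration of this ratio, the function below computes the estimate from the photon counts found in a lookup region. The function name and the choice to raise an error when no photons are found are my own assumptions, not something taken from the paper.

```python
def estimate_visibility(num_light_photons: int, num_shadow_photons: int) -> float:
    """Approximate visibility as the fraction of nearby photons that are light photons."""
    total = num_light_photons + num_shadow_photons
    if total == 0:
        # Assumption: with no photons nearby, the estimate is undefined.
        raise ValueError("no photons found in the lookup region")
    return num_light_photons / total
```

For example, three light photons and one shadow photon in the lookup region give a visibility of 0.75.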

The occlusion photons can be distributed through the scene in a number of different ways. I implemented three ways of generating the occlusion photons: from the light source, from the camera, and uniformly over the surfaces.

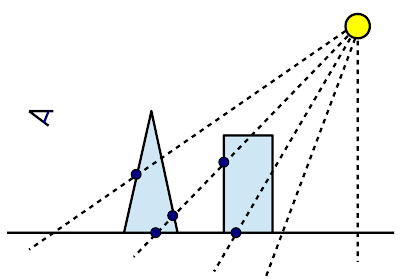

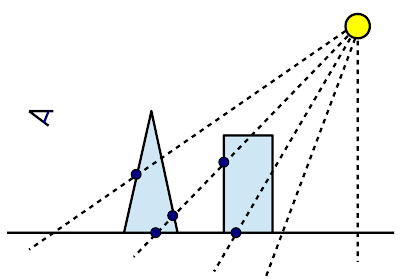

Occlusion rays from the light source

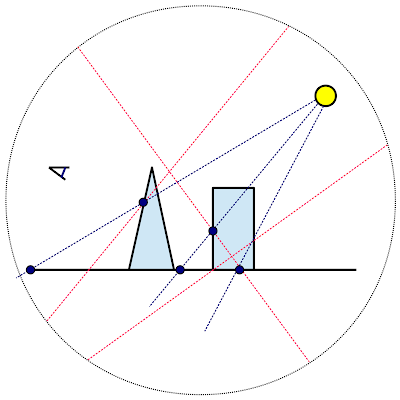

Occlusion rays are traced through the scene from the light sources. A Halton sequence is used to determine a quasi-random position and direction for each occlusion ray. The light source is selected using a cumulative distribution function based on the power of the light sources (stronger lights receive more photons). On each intersection excluding the first, an occlusion photon is generated. Figure 1 displays this approach:

Figure 1: Light source sampling. Occlusion rays (striped lines) are shot from the light source. Upon each intersection, except for the first, an occlusion photon is stored.
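The two sampling ingredients of light source sampling can be sketched in Python as follows. This is not PBRT's code: `radical_inverse`, `build_power_cdf` and `select_light` are hypothetical helper names, and the light powers are assumed to be plain positive scalars.

```python
from bisect import bisect_right
from itertools import accumulate


def radical_inverse(index: int, base: int) -> float:
    """One dimension of the Halton sequence: mirror the digits of index around the radix point."""
    result = 0.0
    digit_weight = 1.0 / base
    while index > 0:
        result += (index % base) * digit_weight
        index //= base
        digit_weight /= base
    return result


def build_power_cdf(powers):
    """Normalised cumulative distribution over the light powers."""
    total = sum(powers)
    return [c / total for c in accumulate(powers)]


def select_light(cdf, u):
    """Pick the light whose CDF interval contains u in [0, 1)."""
    return min(bisect_right(cdf, u), len(cdf) - 1)
```

With powers 1 and 3, the second light covers three quarters of the unit interval, so it receives roughly three times as many occlusion rays as the first.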

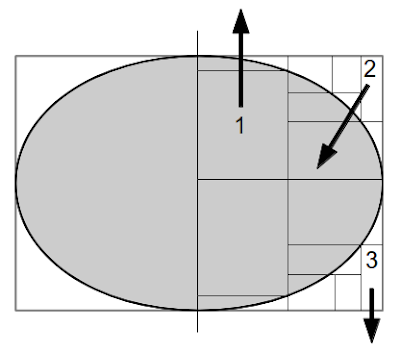

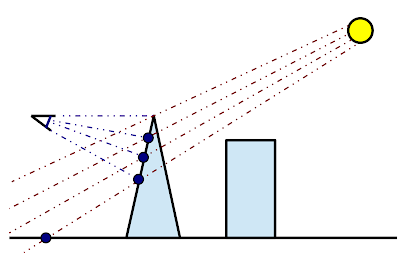

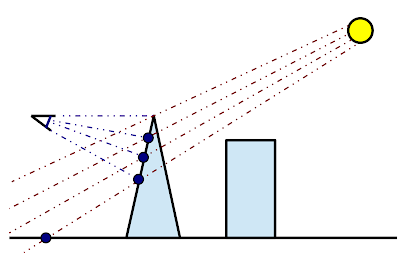

Occlusion rays from the camera

Camera rays are traced randomly from the camera's viewpoint. The first intersection point of each camera ray determines the direction in which an occlusion ray is shot. The origin of the occlusion ray is determined by selecting a random point on a light source. The light source is selected using the cumulative distribution function of the power of the light sources. This technique is displayed in figure 2:

Figure 2: Camera sampling. First, camera rays (blue lines) are traced from the camera. Their first intersection points are used to determine the direction of the occlusion rays (red lines). The origin of the occlusion rays is determined by taking a point on a light source using a cumulative distribution function.
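Constructing such an occlusion ray is simple vector arithmetic. The sketch below assumes points are plain 3-tuples and uses a hypothetical helper name, `occlusion_ray`. Tracing the camera ray and sampling the point on the light are left out.

```python
import math


def occlusion_ray(light_point, hit_point):
    """Ray starting on the light and pointing at the camera ray's first hit.

    Returns (origin, unit_direction).
    """
    delta = [h - l for h, l in zip(hit_point, light_point)]
    length = math.sqrt(sum(c * c for c in delta))
    if length == 0.0:
        raise ValueError("hit point coincides with the point on the light")
    return tuple(light_point), tuple(c / length for c in delta)
```

The ray is then traced past the hit point as well, so that every surface behind the first intersection can receive an occlusion photon.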

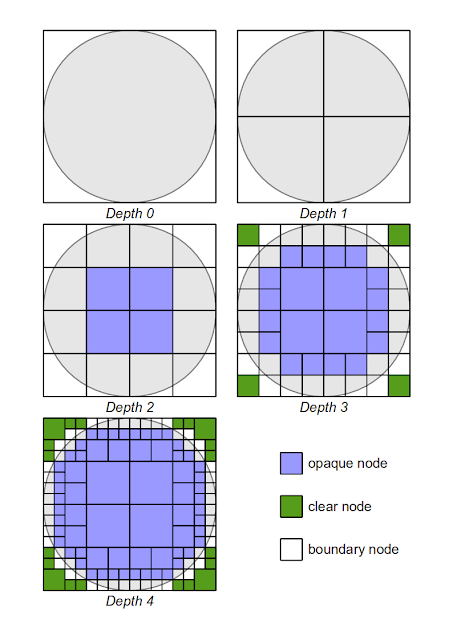

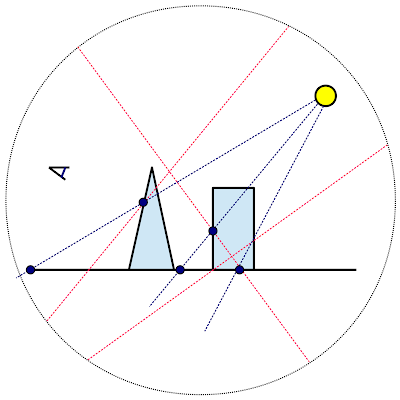

Uniform occlusion rays

With this technique we want the density of the occlusion photons to be evenly distributed through the scene. To accomplish this, I used global line sampling (since PBRT does not allow you to access the geometry of the scene directly). With this technique, random rays are shot through the scene and each surface has a chance of being hit that is proportional to its surface area.

First the bounding sphere of the scene is determined. Then two points are randomly generated on this sphere and a ray connecting these two points is shot through the scene.

One of the intersection points of each ray is randomly selected and used as the target that the occlusion ray is shot towards. The origin of the occlusion ray is determined as described in the previous subsection, by choosing a random point on a light source.

This technique is shown in figure 3:

Figure 3: Uniform sampling. Random rays (red lines) are shot through the scene. One of their intersection points is chosen and used as the direction to shoot occlusion rays (blue lines) to. The origin of the occlusion rays is determined by choosing a random point on the light source.
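Generating one global line can be sketched as below. The function names are hypothetical, and the sketch uses Python's pseudo-random generator to pick uniformly distributed points on the bounding sphere. It does not show how the line is intersected with the scene.

```python
import math
import random


def uniform_point_on_sphere(center, radius, rng):
    """Uniformly distributed point on a sphere (uniform in z and in azimuth)."""
    z = 1.0 - 2.0 * rng.random()
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (
        center[0] + radius * r * math.cos(phi),
        center[1] + radius * r * math.sin(phi),
        center[2] + radius * z,
    )


def global_line(center, radius, rng):
    """Two random points on the bounding sphere; the ray between them is one global line."""
    start = uniform_point_on_sphere(center, radius, rng)
    end = uniform_point_on_sphere(center, radius, rng)
    return start, end


def pick_target(intersections, rng):
    """Randomly choose one intersection of the line as the occlusion ray target."""
    return rng.choice(intersections) if intersections else None
```

A line that misses all geometry yields no target, so the work spent generating and tracing it is wasted.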

Results

The images below show some of the results, along with the parameters used and the rendering times. All of the scenes were rendered on the following hardware: Intel Core i7-2630QM at 2.0 GHz with 6 GB of DDR3, running Ubuntu 12.04 (64-bit).

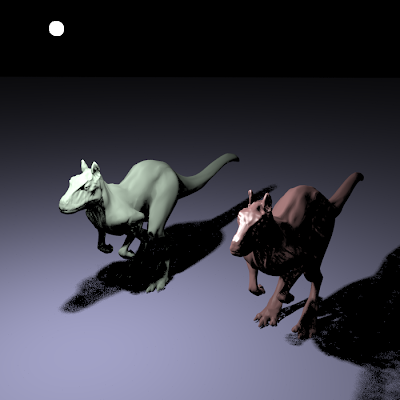

Comparison of the rendering techniques

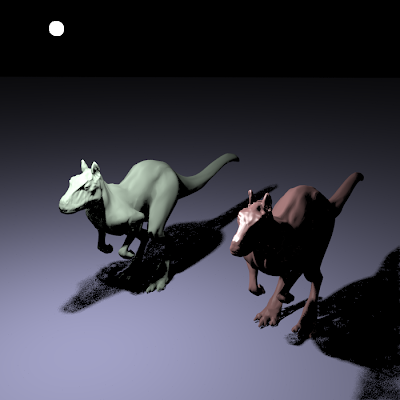

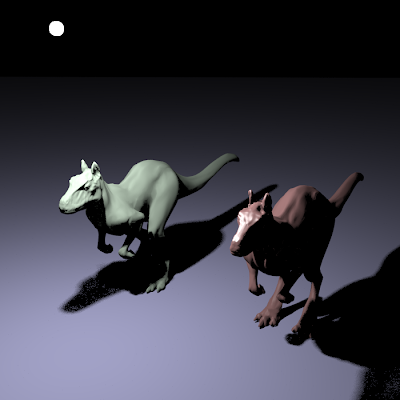

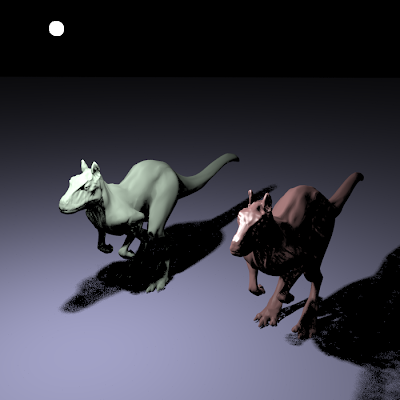

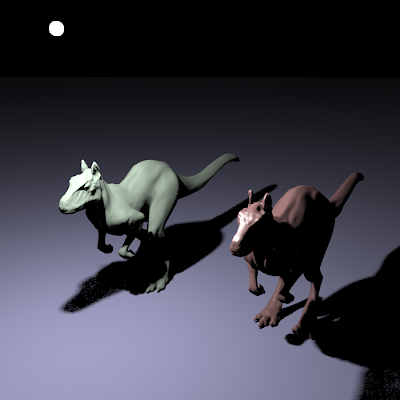

First I will compare the different techniques (occlusion mapping without light photons, occlusion mapping with light photons and the technique by Jensen) with each other. The results below are rendered with the three techniques and compared to a standard ray-traced image. The images show the killeroo scene that comes with PBRT, with 8 samples per pixel.

Rendering with 10,000 photons (light source radius 1)

| Technique | Number of photons | Average number of occluders per photon | Occlusion shooting time | Rendering time | Total time |
| --- | --- | --- | --- | --- | --- |
| Occlusion mapping without light photons | 11903 | 1.90817 | 0.118894s | 2.64184s | 2.76073s |
| Occlusion mapping with light photons | 25912 | 0.231283 | 0.094949s | 2.63185s | 2.7268s |
| Jensen's technique | 25925 | 0.231514 | 0.107828s | 2.57188s | 2.67971s |
| Direct lighting | - | - | - | - | 14.2238s |

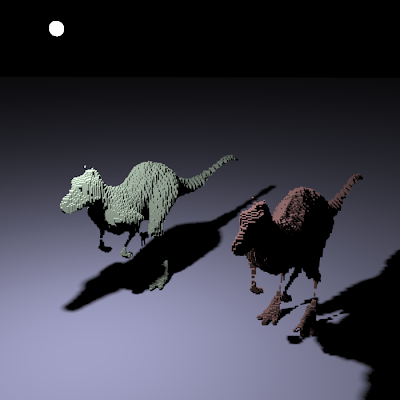

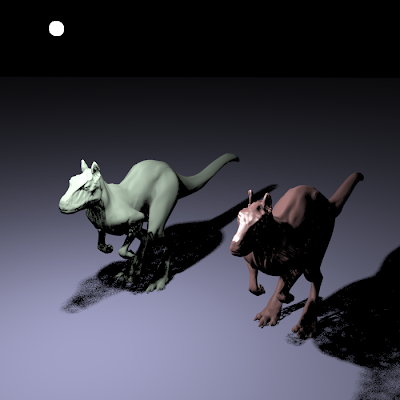

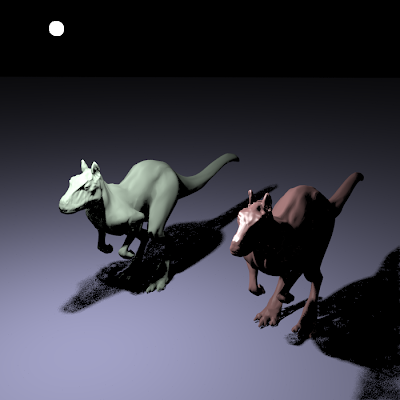

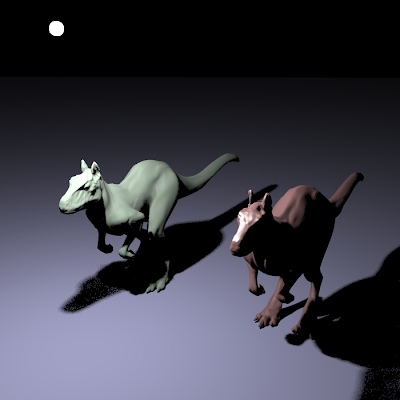

Rendering with 100,000 photons (light source radius 1)

| Technique | Number of photons | Average number of occluders per photon | Occlusion shooting time | Rendering time | Total time |
| --- | --- | --- | --- | --- | --- |
| Occlusion mapping without light photons | 101844 | 1.89236 | 0.768156s | 3.19457s | 3.96273s |
| Occlusion mapping with light photons | 115409 | 0.225866 | 0.188688s | 3.19719s | 3.38588s |
| Jensen's technique | 115350 | 0.225626 | 0.184848s | 2.87288s | 3.05773s |
| Direct lighting | - | - | - | - | 14.2238s |

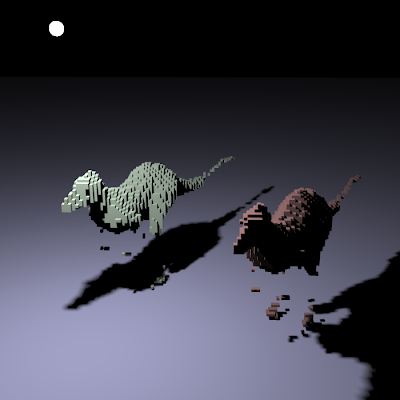

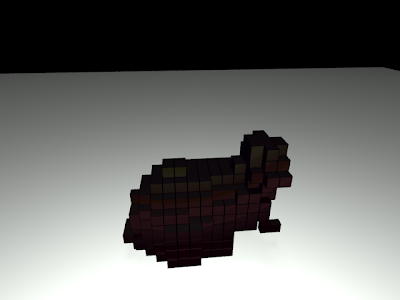

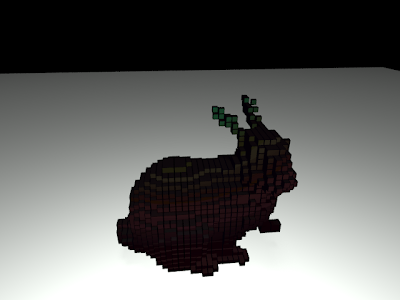

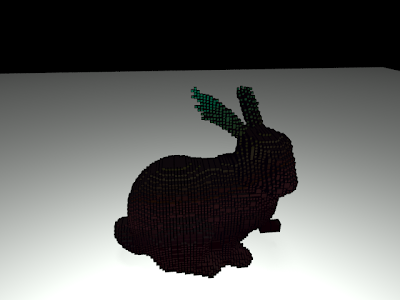

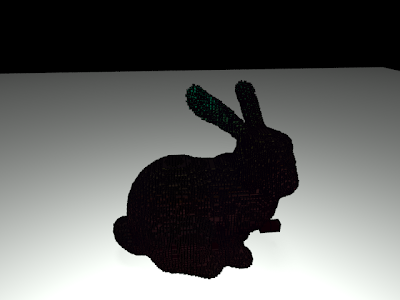

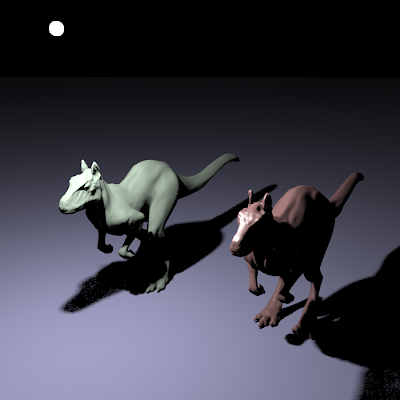

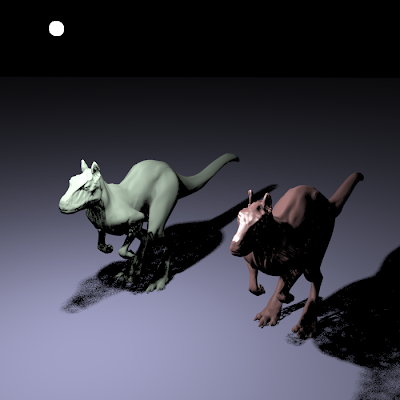

Rendering with 1,000,000 photons (light source radius 1)

| Technique | Number of photons | Average number of occluders per photon | Occlusion shooting time | Rendering time | Total time |
| --- | --- | --- | --- | --- | --- |
| Occlusion mapping without light photons | 1001683 | 1.8944 | 8.53205s | 6.40291s | 14.9935s |
| Occlusion mapping with light photons | 1015612 | 0.225866 | 1.96708s | 6.92928s | 8.89636s |
| Jensen's technique | 115350 | 0.225626 | 1.95626s | 5.60054s | 7.55659s |
| Direct lighting | - | - | - | - | 14.2238s |

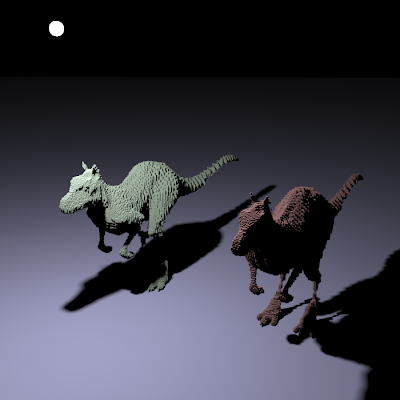

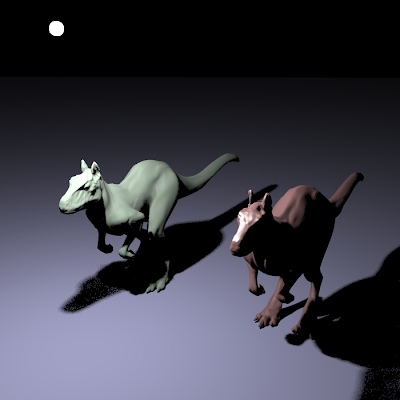

Rendering with 100,000 photons (light source radius 3)

| Technique | Number of photons | Average number of occluders per photon | Occlusion shooting time | Rendering time | Total time |
| --- | --- | --- | --- | --- | --- |
| Occlusion mapping without light photons | 101675 | 1.89546 | 0.786429s | 3.45047s | 4.2369s |
| Occlusion mapping with light photons | 115387 | 0.227209 | 0.187175s | 3.13611s | 3.32329s |
| Jensen's technique | 115346 | 0.2269 | 0.18869s | 2.96835s | 3.15704s |
| Direct lighting | - | - | - | - | 14.6854s |

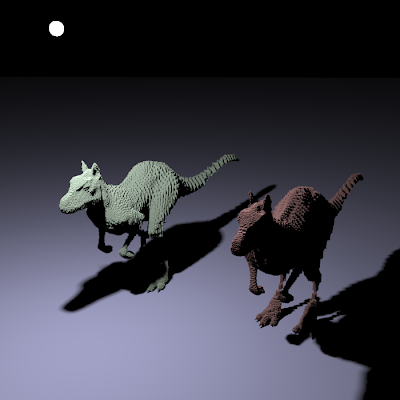

Rendering with 1,000,000 photons (light source radius 3)

| Technique | Number of photons | Average number of occluders per photon | Occlusion shooting time | Rendering time | Total time |
| --- | --- | --- | --- | --- | --- |
| Occlusion mapping without light photons | 1001686 | 1.89493 | 8.58199s | 6.75203s | 15.334s |
| Occlusion mapping with light photons | 115387 | 0.227209 | 1.9558s | 7.1825s | 9.1383s |
| Jensen's technique | 1014080 | 0.225214 | 2.02617s | 5.78872s | 7.81489s |
| Direct lighting | - | - | - | - | 14.6854s |

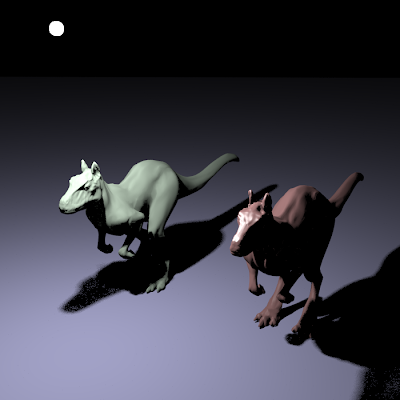

The above sequence of images shows that for a large number of occlusion photons the results converge to the correct image. Furthermore, we can make the following remarks about the three techniques:

- Occlusion mapping without light photons is best at preserving the shadow boundaries. The difficulty with this technique is that when only a small number of occlusion photons is created, light dots form inside the shadow (discussed further in the conclusion).

- Occlusion mapping with light photons partially solves this problem. Thanks to the light photons, shadow rays are only traced in the penumbra regions (the soft shadow regions), because light photons never occur in regions that are fully in shadow. The problem with this technique is that the occlusion map holds less information about the occluders (the average number of occluders per photon is only around 0.2).

- Jensen's technique is only included to compare the other two techniques with a technique that does not trace any shadow rays. The ratio of light photons to light plus occlusion photons is only a rough estimate. To get exact results, a prohibitive number of photons would need to be stored and the lookup radius for the occlusion map would have to be nearly zero.
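The role of the light photons during rendering can be summarised in a small decision function. This is only a sketch of my reading of the remarks above. The labels and the handling of the "lit" and "unknown" cases are assumptions, and the real integrator works on a kd-tree lookup rather than on two counts.

```python
def classify_shading_point(num_light_photons: int, num_occlusion_photons: int) -> str:
    """Decide how to shade a point from the photons found near it.

    Assumed rules:
    - no photons at all: nothing is known, fall back to ordinary shadow rays
    - only occlusion photons: fully in shadow, no shadow rays needed
    - only light photons: fully lit, no shadow rays needed
    - both kinds: penumbra, trace shadow rays against the stored occluders
    """
    if num_light_photons == 0 and num_occlusion_photons == 0:
        return "unknown"
    if num_light_photons == 0:
        return "shadow"
    if num_occlusion_photons == 0:
        return "lit"
    return "penumbra"
```

Only the "penumbra" case (and the "unknown" fallback) costs shadow rays.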

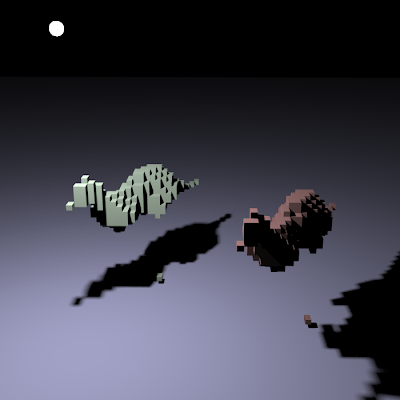

Comparison of the occlusion shooting methods

Finally, we compare the methods for distributing the occlusion photons in the scene. For this we render the killeroo scene with 100,000 and 1,000,000 occlusion photons (no light photons).

Rendering with 100,000 occlusion photons

| Sampling method | Average number of occluders per occlusion photon | Occlusion photon shooting time | Render time | Total time |
| --- | --- | --- | --- | --- |
| Light sampling | 1.89528 | 0.863877s | 3.36405s | 4.22793s |
| Camera sampling | 2.14348 | 0.504893s | 3.96897s | 4.47387s |
| Uniform sampling | 1.87973 | 25.7469s | 3.40524s | 29.1521s |

Rendering with 1,000,000 occlusion photons

| Sampling method | Average number of occluders per occlusion photon | Occlusion photon shooting time | Render time | Total time |
| --- | --- | --- | --- | --- |
| Light sampling | 1.87973 | 8.52909s | 6.69704s | 15.2261s |
| Camera sampling | 2.14517 | 3.59355s | 6.98851s | 10.5821s |
| Uniform sampling | 1.87756 | 298.16s | 6.97551s | 305.135s |

From the resulting images we can conclude that camera sampling is the most effective method. On average, more occlusion information is stored per photon. Thanks to the camera-driven construction of the occlusion map, we can be certain that occlusion photons are stored in visible locations. Finally, we can see that the resulting images are better (compare the right flank of the right killeroo in the images).

Camera sampling is also more efficient in the time needed to construct the occlusion map. Light sampling wastes a lot of time shooting rays in directions that do not have any geometry. The global line sampling algorithm for uniform sampling also generates a lot of rays that do not intersect any geometry in the scene.

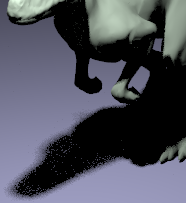

Conclusion

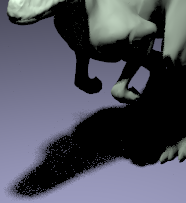

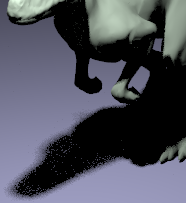

The current implementation still has some issues. The main issue is the light spots in the shadow regions, which can be seen in the figure below:

Figure: Light spots in the shadow regions.

These light spots occur because some triangles in the mesh are never hit by any occlusion ray. This means that these triangles can never be tested for occlusion, leading to false misses during rendering. This problem will become even more severe when the light sources are large.

A solution to this problem would be to create large volumetric occluders inside the models and to store these volumetric occluders in the occlusion photons as well. This will partially resolve the problem because, although occlusion rays may miss some small triangles, they are less likely to miss the larger volumetric occluders. The volumetric occluders can also be used to increase performance. Since a volumetric occluder is more likely to be hit than a triangle of the mesh (volumetric occluders are usually large), we can choose to intersect the volumetric occluders first (which are also cheaper to intersect).
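The "test the cheap volumetric occluders first" idea could look roughly like the sketch below. It assumes the volumetric occluders are spheres placed entirely inside the models, which is my own simplification. `ray_hits_sphere`, `is_occluded` and the `triangle_test` callback are hypothetical names.

```python
import math


def ray_hits_sphere(origin, direction, max_t, center, radius):
    """True if origin + t * direction (unit direction) hits the sphere for 0 < t < max_t."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(x * d for x, d in zip(oc, direction))
    c = sum(x * x for x in oc) - radius * radius
    discriminant = b * b - c
    if discriminant < 0.0:
        return False
    root = math.sqrt(discriminant)
    return any(1e-9 < t < max_t for t in (-b - root, -b + root))


def is_occluded(origin, direction, max_t, spheres, triangle_test):
    """Shadow test that tries the volumetric occluders before the triangles."""
    for center, radius in spheres:
        if ray_hits_sphere(origin, direction, max_t, center, radius):
            return True  # blocked by a volumetric occluder, triangles are skipped
    # Fall back to the (more expensive) per-triangle test.
    return triangle_test(origin, direction, max_t)
```

Because the spheres lie inside the geometry, a hit on a sphere implies that the shadow ray is blocked, so the early exit never produces a false shadow.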

Occlusion mapping with light photons also solves the problem of the light spots in the shadow regions. No shadow rays will be shot from regions that are in full shadow, because these regions can't contain any light photons. Therefore the false misses are avoided. The downside is that when light photons are used, less information about occlusion is stored in the occlusion map for the same number of photons: without light photons, the average number of occluders per photon is about 1.8; with light photons, it is about 0.2.

Finally, we could also store neighbouring triangles of an occluder in an occlusion photon. This could affect performance, since the number of stored occluders will increase by a great amount.